- Can you explain how machine learning works using a really simple example?

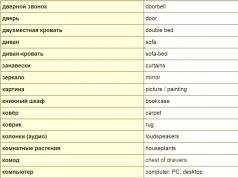

I can. One of the oldest machine learning methods is the decision tree. Let's build one right now. Say some hypothetical person invites you on a date. What matters to you?

- First of all, whether I know him or not...

(Victor writes this on the blackboard.)

- …If I don't know him, then I need to answer the question of whether he is attractive or not.

And if you do know him, it doesn't matter? I think I get it, that's the friend-zone branch! So I'm writing it down: if you don't know him and he is unattractive, the answer is "probably not." If you know him, the answer is yes.

- If I know him, it still matters!

No, that will be the friend-zone branch.

- Okay, then let's add here whether he is interesting or not. After all, when you don't know a person, your first reaction is to his appearance; with someone you know, you already look at what he thinks and how.

Let's do it differently: ambitious or not. If he is ambitious, it will be hard to keep him in the friend zone, because he will want more. And an unambitious one will just suffer.

(Victor completes the decision tree.)

Done. Now you can predict which guy you are most likely to go on a date with. Some dating services predict exactly this kind of thing, by the way. In the same way, you can predict how many goods customers will buy or where people will be at a given time of day.
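
The tree drawn on the blackboard can be written down as a few nested conditions. The sketch below is only an illustration: the questions (known or not, attractive or not, ambitious or not) come from the conversation, the "don't know and unattractive" leaf is read as "probably not", and the remaining leaf labels and the function name `predict_date` are assumptions, since the finished tree is not shown in the text.

```python
def predict_date(knows_him: bool, attractive: bool, ambitious: bool) -> str:
    """Walk the tree from the root question down to a leaf answer."""
    if not knows_him:
        # Stranger branch: the first reaction is to appearance.
        return "yes" if attractive else "probably not"
    # Acquaintance branch: the split on ambition comes from the conversation,
    # but the two leaf labels below are assumed for illustration.
    return "yes" if ambitious else "friend zone"


print(predict_date(knows_him=False, attractive=False, ambitious=True))
```

In practice such trees are not drawn by hand: a learning algorithm picks the questions and their order from examples with known answers.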

The answers don't have to be "yes" or "no"; they can also be numbers. If you want a more accurate forecast, you can build several such trees and average their outputs. With something this simple, you can actually predict the future.
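
Averaging several trees that output numbers might look roughly like this. The three tiny trees, their thresholds and the order counts are invented for illustration; real ensembles learn each tree from data rather than writing it by hand.

```python
from statistics import mean


# Three hypothetical hand-written regression trees that each guess
# the number of taxi orders from the hour of day and a weekend flag.
def tree_a(hour: int, is_weekend: bool) -> float:
    if is_weekend:
        return 120.0
    return 200.0 if 7 <= hour <= 10 else 80.0


def tree_b(hour: int, is_weekend: bool) -> float:
    return 150.0 if hour < 12 else 90.0


def tree_c(hour: int, is_weekend: bool) -> float:
    return 180.0 if is_weekend and hour >= 18 else 100.0


def forest_predict(hour: int, is_weekend: bool) -> float:
    """Average the numeric answers of all trees."""
    return mean(tree(hour, is_weekend) for tree in (tree_a, tree_b, tree_c))


print(forest_predict(hour=8, is_weekend=False))
```

Each tree alone is crude, but their individual mistakes tend to partly cancel out in the average.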

Now, do you think it would have been hard for people to come up with such a scheme two hundred years ago? Not at all! There is no rocket science in it. As a phenomenon, machine learning has existed for about half a century, and Ronald Fisher was already making predictions from data at the beginning of the 20th century. He took irises, measured the length and width of their sepals and petals, and used these parameters to determine the species of the plant.

Machine learning has been used actively in industry only in recent decades: the powerful and relatively inexpensive machines needed to process large amounts of data, for example to build such decision trees, appeared not so long ago. But it is still breathtaking: we draw these things for every task and use them to predict the future.

- Well, surely no better than any octopus that predicts football matches...

No, of course, we are no match for the octopuses. Although we do have more variety. Today machine learning helps save time and money and makes life more comfortable. A few years ago it beat humans at image classification. For example, a computer can tell 20 breeds of terrier apart, and an ordinary person cannot.

- And when you analyze users, each person is a set of numbers for you?

Roughly speaking, yes. When we work with data, we describe all objects, including user behavior, with a certain set of numbers. And these numbers reflect the peculiarities of people's behavior: how often they take a taxi, what class of taxi they use, what places they usually go to.

Right now we are actively building look-alike models to identify groups of people with similar behavior. When we introduce a new service or want to promote an old one, we offer it to those who are likely to be interested.

For example, we have a service: two child seats in a taxi. We can spam everyone with this news, or we can address it only to a certain circle of people. Over the course of a year, we accumulated a number of users who wrote in the comments that they needed two child seats. We found them and people like them. Roughly speaking, these are people over 30 who travel regularly and love Mediterranean cuisine. Of course, there are far more features than that; this is just an example.
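
One simple way to picture a look-alike model is as a similarity search around a "seed" group. The sketch below is not the production approach described in the interview: the three features, the user names, the cosine-similarity measure and the 0.95 threshold are all assumptions chosen to keep the example small.

```python
import math


def cosine(u, v):
    """Cosine similarity between two feature vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def look_alikes(seed_vectors, candidates, threshold=0.95):
    """Return candidates whose behavior resembles the average seed user."""
    count = len(seed_vectors)
    centroid = [sum(column) / count for column in zip(*seed_vectors)]
    return [name for name, vector in candidates.items()
            if cosine(centroid, vector) >= threshold]


# Hypothetical features: rides per month, night rides, rides with a child seat.
seeds = [[10, 1, 8], [12, 0, 9]]          # users who asked for two child seats
others = {"anna": [9, 1, 7], "boris": [1, 9, 0]}
print(look_alikes(seeds, others))
```

Here "anna" behaves like the seed users and would be shown the new service, while "boris" would not.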

- Even such subtleties?

This is a simple matter. Everything is calculated using search queries.

- And can this somehow work in the app? For example, you know that I'm broke and subscribe to groups like "How to survive on 500 rubles a month", so I only get offered battered cheap cars; I subscribe to SpaceX news, and I get a Tesla from time to time?

It could work this way, but such things are not approved of at Yandex, because this is discrimination. When you personalize a service, it is better to offer not the most acceptable option but the best available one, the one the person will like. Distributing things by the logic "this one needs a better car, and this one a worse one" is evil.

- Everyone has perverse desires, and sometimes you need to find not a recipe for a Mediterranean dish but, for example, pictures about coprophilia. Will personalization work in this case as well?

There is always a private mode.

If I don’t want anyone to know about my interests or, let’s say, friends come to me and want to watch some trash, then it’s better to use incognito mode.

You can also decide which company's service to use, for example, Yandex or Google.

- Is there a difference?

That's a complicated question. I can't speak for others, but Yandex is strict about protecting personal data. Employees are watched especially closely.

- That is, if I broke up with a guy, I won’t be able to find out if he went to this dacha or not?

Even if you work for Yandex. This, of course, is sad, but yes, it will not be possible to find out. Most employees do not even have access to this data. Everything is encrypted. It's simple: you can't spy on people, it's personal information.

By the way, we had an interesting case on the topic of breaking up with guys. When we were building the prediction of point "B", the destination of a taxi ride, we introduced hints. Here, look.

(Victor enters the Yandex.Taxi application.)

For example, the app thinks I'm at home. It suggests that I go either to work or to RUDN University (I give lectures there as part of the machine learning course Data Mining in Action). At some point while developing these hints, we realized that we must not compromise the user: anyone can see point "B". For that reason we decided not to suggest places by similarity. Otherwise you would be sitting in a decent place with decent people, ordering a taxi, and the app would tell you: "Look, you haven't been to this bar yet!"

- What are the blue dots flashing on your map?

These are pickup points. They show where it is most convenient to be picked up. After all, you can call a taxi to a spot where pickup is quite inconvenient. But in general, you can call one anywhere.

- Yes, anywhere. Once it put me two blocks away from where I was.

Recently there have been various problems with GPS, which led to some funny situations. People on Tverskaya, for example, were relocated by their navigation to the Pacific Ocean. So, as you can see, it sometimes misses by more than two blocks.

- And if you restart the application and tap again, the price changes by a few rubles. Why?

If demand exceeds supply, the algorithm automatically generates a multiplier - this helps those who need to leave as soon as possible to use a taxi, even during periods of high demand. By the way, using machine learning, you can predict where there will be more demand in, for example, an hour. This helps us tell drivers where there will be more orders so that supply matches demand.
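
The idea of a demand-driven multiplier can be illustrated with a toy rule. This is explicitly not the service's actual formula, which the interview does not describe: the ratio of open orders to free drivers, the cap of 3.0 and the function name are all assumptions.

```python
def surge_multiplier(open_orders: int, free_drivers: int, cap: float = 3.0) -> float:
    """Toy rule: the price grows with the demand/supply ratio, within limits."""
    if free_drivers <= 0:
        return cap  # no free cars at all: use the maximum allowed multiplier
    ratio = open_orders / free_drivers
    # Never go below the base price (1.0) or above the cap.
    return min(cap, max(1.0, ratio))


print(surge_multiplier(open_orders=30, free_drivers=20))
```

Because the inputs change from minute to minute, asking for a price twice in a row can give slightly different results.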

- Don't you think that Yandex.Taxi will soon kill the entire taxi market?

I don't think so. We are for healthy competition and are not afraid of it.

I myself, for example, use different taxi services. Waiting time matters to me, so I check several apps to see which taxi will arrive sooner.

- You teamed up with Uber. What for?

It's not my place to comment. I think uniting is a profoundly sensible decision.

- In Germany, one guy mounted a bathtub on drones and flew off to get a burger. Have you thought that it is time to conquer the airspace?

I don't know about air space. We follow the news in the spirit of “Uber launched taxi boats”, but I can’t say anything about the air.

- What about self-driving taxis?

Here is an interesting point. We are developing them, but we need to think about how exactly to use them. It is still too early to predict how and when they will appear on the streets, but we are working hard to develop technology for a fully autonomous car, where a human driver is not needed at all.

- Are there any concerns that the software of self-driving cars can be hacked to control the vehicle remotely?

Risks exist wherever there are technologies and gadgets. But alongside the technology itself, another field is developing: its protection and safety. Everyone involved in developing technology in one way or another is also working on protection systems.

- What user data do you collect and how do you protect it?

We collect de-identified usage data, such as where from, where to, and when you traveled. Everything important is hashed.

- Do you think self-driving cars will reduce the number of jobs?

I think there will only be more of them. After all, these self-driving cars also have to be serviced somehow. Having to change your specialty is, of course, somewhat stressful, but what can you do.

- Gref says at each of his lectures that a person will radically change profession at least three times.

I can't name any specialty that lasts forever. A developer does not work in the same language and with the same technologies all his life. Everyone has to readjust. In machine learning, I can clearly feel that guys six years younger than me think much faster than I do. And people aged 40 or 45 feel it even more strongly.

- Does experience no longer matter?

It does. But methods change: you can come to a field where, for example, deep learning was not used, work there for some time, and then deep learning methods are introduced everywhere and you don't understand anything about them. And that's it. Your experience may be useful only for team planning, and not always even there.

- And your profession is a data scientist, is it in demand?

The demand for data scientists is skyrocketing. We are obviously in a period of insane hype. Thank God, blockchain has helped this hype subside a little: blockchain specialists get snapped up even faster.

But many companies now think that if they invest in machine learning, their gardens will immediately bloom. This is not true. Machine learning should solve specific problems, and not just exist.

Sometimes a bank wants to build a recommender system for its users. We ask: "Do you think it will be economically justified?" They answer: "We don't care. Just do it. Everyone has recommender systems, and we want to be on trend."

The painful part is that something really useful for a business cannot be built in a day. You need to watch how the system learns. At first it always makes errors, and it may lack some data during training. You fix the mistakes, then fix them again, and sometimes redo everything. After that you need to set it up to run in production so that it is stable and scalable, and that takes more time. As a result, one project takes six months, a year, or more.

If you treat machine learning methods as a black box, you can easily miss the moment when some nonsense starts to happen. There is an old story. The military asked researchers to develop an algorithm that could tell whether there is a tank in a picture. The researchers built it and tested it; the quality was excellent, everything was great, and they handed it over. The military came back and said that nothing worked. The scientists nervously started investigating. It turned out that every picture with a tank that the military had supplied had a check mark drawn in pen in the corner. The algorithm had learned to find the check mark perfectly and knew nothing about tanks. Naturally, there were no check marks on the new pictures.

- I have met children who develop their own dialogue systems. Have you ever thought about working with children?

I have long been going to all sorts of events for schoolchildren and giving lectures about machine learning. By the way, a tenth grader once taught me something about one of the topics. I was absolutely sure that my talk would be good and interesting; proud of myself, I started speaking, and the girl said: "Ah, so we just want to minimize this thing." I looked and thought: really, why not, it can simply be minimized, and there is nothing special to prove here. Several years have passed since then, and now she attends our lectures as a Phystech student. Yandex, by the way, has Yandex.Lyceum, where schoolchildren can get basic programming knowledge for free.

- Recommend some universities and faculties where machine learning is taught now.

There is the Moscow Institute of Physics and Technology (MIPT), with its FIVT and FUPM faculties. There is also the wonderful Faculty of Computer Science at HSE, and machine learning is taught at Moscow State University. And now you can also take our course at RUDN University.

As I said, this profession is in demand. For a very long time, people with a technical education ended up doing completely unrelated things. Machine learning is a great example of a field where everything that people with a technical background studied is now directly needed, useful and well paid.

- How well?

Name the amount.

- 500 thousand per month.

You can, but not as an ordinary data scientist. At the same time, in some companies a complete trainee can get 50 thousand for simple work. The spread is very wide. In general, the salary of a top data scientist is comparable to that of the CEO of a mid-sized company. In many companies the employee gets plenty of perks on top of the salary, and if it is clear that the person came to do real work rather than to put a good brand name on their resume, everything will be fine for them.

Almost a year has passed since the start of an unusual course at FIVT, the innovation workshop. Its essence is the creation of IT startups by student teams under the guidance of experienced mentors. It turned out well: thanks to the course, one student spent part of the summer in Silicon Valley, another received a grant of 800,000 rubles to develop a project, and ABBYY is ready to buy one team's project outright. And these are not all the results of the workshop!

At the beginning of 2011, FIVT third-year students were gathered in the Assembly Hall and told that over the next year they would have to create their own startups. The students had mixed feelings about the idea: it was not at all clear how to do it, and the responsibility was unusual, since they had to create a technology business and not just another study project. Here is what Victor Kantor, winner of the MIPT Student Olympiad in Physics and a student of the Yandex department, thinks about it:

When I chose the FIVT upon admission, I hoped that we would have something similar. So I'm glad I didn't hope in vain. During the year, it was felt that the course was still being formed, much of it is new, many issues are controversial not only for students, but also for the organizers, but in general, I think, the trends are positive. I liked this course.

To make the students' work easier, various curators were invited to offer their ideas for building innovative businesses. They were a very diverse group: from senior and graduate students of the Moscow Institute of Physics and Technology to Ernst & Young's innovation adviser Yuri Pavlovich Ammosov (he headed the entire course) and Mikhail Batin, who works on regenerative medicine and life extension. As a result, the Phystech students chose the ideas that interested them most, curators joined the teams, and hard but exciting work began.

In the almost a year that has passed since then, the students have faced many problems, some of which have been solved. Now their results can be assessed: despite the difficulties, they pulled it off. MIPT students (in addition to FIVT, some students from FOPF and other faculties joined the process) managed to prepare several quite interesting and viable projects:

Askeroid (formerly Ask Droid) - search for smartphones (Anastasia Uryasheva)

An Android application that lets you conveniently search across a large number of search engines. Some experts showed interest in the development, and as a result Anastasia spent the whole of last summer at Plug&Play, one of the best-known incubators in Silicon Valley, learning the basics of technology entrepreneurship and talking to international venture capital experts.

1minute.ru - one minute for good (Lev Grunin)

This project lets anyone do charity work simply, quickly and completely free of charge. The model is simple: advertisers offer a set of activities on the site, users voluntarily take part in them, and all the advertising money is transferred to a charitable foundation. A week after launch, the project had gathered more than 6,500 users and is not going to stop there. As a result, thanks to Lev and his team, 600 children from orphanages will receive cherished gifts from Santa Claus for the New Year. Have you already spent one minute on a good deed?!

Embedded Desktop - a computer in your phone (Alexey Vukolov)

An application that combines the capabilities of a computer and the mobility of a phone in a single device, an extremely useful product for busy people who often travel on business. It is enough to install it on a smartphone, and the user can "get" their own computer in any hotel or office, and indeed anywhere there is a monitor (a TV will also do), a keyboard and a mouse. The project received a grant to develop the idea and was presented at the Technovation Cup exhibition, and the team is already actively buying equipment with the money. The American manufacturer of MIPS processors is extremely interested in the development.

Smart Tagger - semantic search through documents (Viktor Kantor)

What if you remember that somewhere in your mailbox there was a very important letter about the latest episode of The Big Bang Theory, but you don't remember any keywords from the text? Yandex and Google search are powerless. Smart Tagger comes to the rescue: a "smart" program that uses semantic search will return all the texts whose meaning is related to the popular series. The project won a U.M.N.I.K. grant totaling 400,000 rubles!

MathOcr - formula recognition (Viktor Prun)

ABBYY proposed an interesting task: to create a program that would recognize mathematical formulas of any complexity. FIVT students, working together with interested FOPF students, completed the task: the module really does recognize formulas scanned from calculus or physics textbooks. The result: ABBYY is ready to buy the product for good money.

As part of the ABC AI project, run jointly with MIPT, we have already written about approaches that allow you to "grow" programs according to the principles and laws of Darwinian evolution. For now, however, such an approach to artificial intelligence is a "guest from the future." But how are artificial intelligence systems created today? How are they trained? Viktor Kantor, Senior Lecturer at the Department of Algorithms and Programming Technologies at the Moscow Institute of Physics and Technology and head of the Yandex Data Factory User Behavior Analysis Group, helped us figure this out.

According to a recent report from the research firm Gartner, which regularly updates its technology maturity cycle, machine learning is today at the peak of expectations among all IT technologies. This is not surprising: over the past few years, machine learning has left the sphere of interest of a narrow circle of mathematicians and algorithm theorists and has entered first the vocabulary of IT businessmen and then the world of ordinary people. Today anyone who has used the Prisma app, searched for songs with Shazam, or seen images passed through DeepDream knows that there is such a thing as neural networks with their special "magic".

However, it is one thing to use a technology and another to understand how it works. Generalities like "a computer can learn if you give it a hint" or "a neural network consists of digital neurons and is arranged like a human brain" may help some people, but more often they only confuse matters. Those who are going to study machine learning seriously do not need popular texts: there are textbooks and excellent online courses for them. We will try to take a middle path: explain how learning actually happens using the simplest possible task, and then show how the same approach can be applied to real, interesting problems.

How Machines Learn

To begin with, in order to understand exactly how machine learning happens, let's define the concepts. As defined by one of the pioneers in the field, Arthur Samuel, machine learning refers to methods that "allow computers to learn without being programmed directly." There are two broad classes of machine learning methods: supervised learning and unsupervised learning. The first is used when, for example, we need to teach the computer to search for photos with the image of cats, the second - when we need the machine, for example, to be able to independently group news into stories, as happens in services like Yandex.News or Google News. That is, in the first case, we are dealing with a task that implies the existence of a correct answer (the cat in the photo is either there or not), in the second, there is no single correct answer, but there are different ways to solve the problem. We will focus on the first class of problems as the most interesting.

So, we need to teach the computer to make some predictions, preferably as accurate as possible. Predictions can be of two types: either you need to choose between several answer options (whether or not there is a cat in the picture is a choice of one option out of two; recognizing letters in images is a choice of one option out of several dozen, and so on), or you need to make a numerical prediction, for example, predict a person's weight based on their height, age, shoe size, and so on. These two types of problems only look different; in fact, they are solved in almost the same way. Let's try to understand how.

The first thing we need in order to build a prediction system is to collect a so-called training sample, that is, data on the weight of people in the population. The second is to decide on a set of features from which we can draw conclusions about weight. Clearly, one of the "strongest" of these features will be a person's height, so as a first approximation it is enough to take only that. If weight depends linearly on height, our prediction will be very simple: a person's weight equals their height multiplied by some coefficient, plus some constant, which is written by the simplest formula $y = kx + b$. All we have to do to train a machine to predict a person's weight is to somehow find the right values of $k$ and $b$.

The beauty of machine learning is that even if the dependence we are studying is very complex, essentially little changes in the approach itself. We will still be dealing with the same regression.

Let's say that a person's weight depends on height not linearly but as its third power (which is generally to be expected, because weight depends on body volume). To take this dependence into account, we simply introduce one more term into our equation, namely the cube of height with its own coefficient, obtaining $y = k_1 x + k_2 x^3 + b$. Now, to train the machine, we need to find not two but three values ($k_1$, $k_2$ and $b$). Let's say we also want our prediction to take into account a person's shoe size, their age, the time they spend watching TV, and the distance from their apartment to the nearest fast food outlet. No problem: we just add these features as separate terms in the same equation.

The most important thing is to create a universal way of finding the required coefficients ($k_1, k_2, \ldots, k_n$). If we have one, it hardly matters which features we use for prediction, because the machine itself will learn to give a large weight to important features and a small weight to unimportant ones. Fortunately, such a method has already been invented, and almost all machine learning successfully relies on it: from the simplest linear models to face recognition systems and speech analyzers. This method is called gradient descent. But before explaining how it works, we need to make a small digression and talk about neural networks.
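
Adding features as separate terms of one equation can be sketched in a few lines. The particular feature list (height, height cubed, shoe size, age), the function names and the coefficient values are assumptions made for illustration; in a trained model the coefficients would be found automatically.

```python
def make_features(height_m: float, shoe_size: float, age: float) -> list:
    """Turn raw measurements into the terms of the regression equation."""
    return [height_m, height_m ** 3, shoe_size, age]


def predict(features, coefficients, intercept):
    """y = k_1 * f_1 + k_2 * f_2 + ... + k_n * f_n + b"""
    return sum(k * f for k, f in zip(coefficients, features)) + intercept


# Made-up coefficients: only height and height cubed matter here.
weight = predict(make_features(2.0, 0.0, 0.0), [1.0, 2.0, 0.0, 0.0], 3.0)
print(weight)
```

Note that the model stays linear in its coefficients even though it contains the cube of height, which is why the same training procedure works for any number of such terms.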

Neural networks

In 2016, neural networks became so firmly entrenched in the news agenda that they were almost identified with all machine learning and advanced IT in general. Formally speaking, this is not true: neural networks are not always used in machine learning; there are other technologies. But on the whole the association is understandable, because it is systems based on neural networks that now give the most "magical" results, such as the ability to search for a person by photo, applications that transfer the style of one image to another, or systems that generate text in the manner of a particular person.

We have already described how neural networks are arranged. Here I just want to emphasize that the strength of neural networks compared to other machine learning systems lies in their multilayered structure, but this does not make them fundamentally different in the way they work. Layering really does make it possible to find very abstract common features and dependencies in complex sets of features, like pixels in a picture. But it is important to understand that, in terms of learning principles, a neural network is not radically different from a set of ordinary linear regression formulas, so the same gradient descent method works great here too.

The "strength" of a neural network lies in the presence of an intermediate layer of neurons that combine the values of the input layer by summing them. Because of this, neural networks can find very abstract features of the data that are difficult to reduce to simple formulas like a linear or quadratic relationship.

Let's explain with an example. We settled on a prediction in which a person's weight depends on their height and height cubed, which is expressed by the formula $y = k_1 x + k_2 x^3 + b$. With some stretch, even such a formula can be called a neural network. In it, as in an ordinary neural network, there is a first layer of "neurons", which is also the layer of features: these are $x$ and $x^3$ (plus the constant "unit neuron" that we keep in mind and for which the coefficient $b$ is responsible). The top, or output, layer is represented by a single "neuron" $y$, that is, the person's predicted weight. And between the first and last layers of "neurons" there are connections whose strength, or weight, is determined by the coefficients $k_1$, $k_2$ and $b$. To train this "neural network" simply means to find these coefficients.

The only difference from "real" neural networks here is that we do not have any intermediate (or hidden) layer of neurons, whose task is to combine input features. Introducing such layers makes it possible not to invent possible dependencies between the available features by hand, but to rely on the combinations of them that already exist in the neural network. For example, age and average TV time can have a synergistic effect on a person's weight, but with a neural network we do not have to know this in advance and enter their product into the formula. There will definitely be a neuron in the network that combines the influence of any two features, and if this influence is really noticeable in the sample, then after training this neuron will automatically receive a large weight.
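
A forward pass through a network with one hidden layer can be sketched as follows. The weights are made-up numbers, and the ReLU activation applied after the weighted sum is an assumption not mentioned in the text (without some nonlinearity the hidden layer would collapse back into a plain linear formula).

```python
def relu(z: float) -> float:
    """Assumed activation: pass positive sums through, zero out negative ones."""
    return max(0.0, z)


def forward(inputs, hidden_weights, hidden_biases, output_weights, output_bias):
    # Each hidden neuron sums all input features with its own weights.
    hidden = [
        relu(sum(w * x for w, x in zip(weights, inputs)) + bias)
        for weights, bias in zip(hidden_weights, hidden_biases)
    ]
    # The output neuron is again a weighted sum, now over hidden neurons.
    return sum(w * h for w, h in zip(output_weights, hidden)) + output_bias


# Two input features, two hidden neurons, one output (illustrative weights).
prediction = forward(
    inputs=[1.0, 2.0],
    hidden_weights=[[1.0, 1.0], [1.0, -1.0]],
    hidden_biases=[0.0, 0.0],
    output_weights=[2.0, 5.0],
    output_bias=1.0,
)
print(prediction)
```

Training such a network means finding all of these weights and biases, exactly as it meant finding the coefficients of the regression formula.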

Gradient descent

So, we have a training set of examples with known data, that is, a table with accurately measured human weights, and some hypothesis about the dependence, in this case the linear regression $y = kx + b$. Our task is to find the correct values of $k$ and $b$, not manually but automatically, and preferably with a universal method that does not depend on the number of parameters in the formula.

To do this, in general, is easy. The main idea is to create a function that will measure the current total error level and “tweak” the coefficients so that the total error level gradually falls. How to make the error level fall? We need to tweak our parameters in the right direction.

Imagine our two parameters that we are looking for, the same k and b, as two directions on the plane, as the north-south and west-east axes. Each point on such a plane will correspond to a certain value of the coefficients, a certain specific relationship between height and weight. And for each such point on the plane, we can calculate the total level of errors that this prediction gives for each of the examples in our sample.

This error level acts like an elevation at each point of the plane, so the whole space begins to resemble a mountain landscape. Mountains are points where the error rate is very high; valleys are places where there are fewer errors. Clearly, to train our system means to find the lowest point of the terrain, the point where the error rate is minimal.

How can you find this point? The most natural way is to keep moving downhill from the point where we initially found ourselves. Sooner or later we will arrive at a local minimum, a point below which there is nothing in the immediate vicinity. It is also desirable to take steps of different sizes: when the slope is steep, you can take wider steps, and when the slope is gentle, it is better to approach the local minimum "on tiptoe", otherwise you can overshoot it.

This is how gradient descent works: we change the feature weights in the direction of the steepest decrease of the error function. We change them iteratively, that is, with a certain step whose size is proportional to the steepness of the slope. Interestingly, when the number of features increases (adding the cube of a person's height, their age, shoe size, and so on), nothing really changes; our landscape simply becomes multidimensional rather than two-dimensional.

The error function can be defined as the sum of the squares of all the deviations that the current formula produces for people whose weight we already know exactly. Let's take some arbitrary values of $k$ and $b$, for example 0 and 50. Then the system will predict that the weight of every person in the sample is always 50 kilograms: $y = 0 \times x + 50$. On a graph, such a dependence looks like a straight line parallel to the horizontal axis. Clearly, this is not a very good prediction. Now let's take the deviation of each actual weight from this predicted value, square it (so that negative deviations are also counted) and sum the results: this will be the error at this point. If you are familiar with the basics of calculus, we can be more precise: the direction of the steepest decrease is given by the partial derivatives of the error function with respect to $k$ and $b$, taken with the opposite sign, and the step is a value chosen from practical considerations: small steps take a long time to compute, and large ones can make us miss the minimum.
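
The whole procedure for the linear case fits in a short sketch. The starting point (0 and 50) follows the example in the text; the five data points, the step size of 0.01 and the number of iterations are assumptions chosen so that the toy problem converges.

```python
def sum_squared_error(k, b, samples):
    """Error function: sum of squared deviations of predictions from truth."""
    return sum((k * x + b - y) ** 2 for x, y in samples)


def gradient(k, b, samples):
    """Partial derivatives of the error function with respect to k and b."""
    d_k = sum(2 * (k * x + b - y) * x for x, y in samples)
    d_b = sum(2 * (k * x + b - y) for x, y in samples)
    return d_k, d_b


def gradient_descent(samples, k=0.0, b=50.0, step=0.01, iterations=5000):
    for _ in range(iterations):
        d_k, d_b = gradient(k, b, samples)
        # Move against the gradient; steeper slope means a larger change.
        k -= step * d_k
        b -= step * d_b
    return k, b


# Toy data generated from y = 2x + 1, so the right answer is known.
samples = [(0, 1), (1, 3), (2, 5), (3, 7), (4, 9)]
k, b = gradient_descent(samples)
print(round(k, 3), round(b, 3))
```

With a step that is too large (here, roughly above 0.03) the same loop would jump back and forth across the valley with growing amplitude instead of settling at the minimum.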

Okay, but what if we have not just a complex regression with many features, but a real neural network? How do we apply gradient descent in this case? It turns out that gradient descent works exactly the same with a neural network, only training occurs 1) in stages, from layer to layer, and 2) gradually, from one example in the sample to another. The method used here is called backpropagation and was independently described in 1974 by Soviet mathematician Alexander Galushkin and Harvard University mathematician Paul John Webros.

A rigorous presentation of the algorithm requires writing out partial derivatives, but on an intuitive level everything happens quite simply. For each example in the sample, we get a certain prediction at the output of the neural network. Since we know the correct answer, we can subtract it from the prediction and thus get an error (more precisely, a set of errors, one for each neuron of the output layer). Now we need to pass this error back to the previous layer of neurons: the more a particular neuron of that layer contributed to the error, the more we need to correct its weights (in fact, we are again taking a partial derivative and moving along the steepest slope of our imaginary landscape). When we have done this, the same procedure must be repeated for the next layer, moving in the opposite direction, that is, from the output of the neural network to the input.
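To make the procedure more tangible, here is a minimal sketch of backpropagation for a tiny network with one hidden layer and a single output neuron. Everything specific in it is an assumption made for illustration: the sigmoid activation, the error defined as half the squared difference, the step of 0.5, the logical OR task, and the names `forward`, `backprop_step` and `train`.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(x, w_hidden, w_out):
    """Return the hidden activations and the network output for one example."""
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x))) for row in w_hidden]
    output = sigmoid(sum(w * h for w, h in zip(w_out, hidden)))
    return hidden, output


def backprop_step(x, target, w_hidden, w_out, step=0.5):
    """Update the weights in place using one example; return its error before the update."""
    hidden, output = forward(x, w_hidden, w_out)
    # Error at the output: prediction minus the correct answer,
    # scaled by the slope of the sigmoid at this output.
    delta_out = (output - target) * output * (1.0 - output)
    # Pass the error back: each hidden neuron gets a share that depends
    # on the weight connecting it to the output.
    delta_hidden = [delta_out * w * h * (1.0 - h) for w, h in zip(w_out, hidden)]
    # Move every weight against its partial derivative.
    for j, h in enumerate(hidden):
        w_out[j] -= step * delta_out * h
    for j, row in enumerate(w_hidden):
        for i, xi in enumerate(x):
            row[i] -= step * delta_hidden[j] * xi
    return 0.5 * (output - target) ** 2


def train(samples, w_hidden, w_out, epochs=2000, step=0.5):
    """Go through the sample example by example; return the total error of each pass."""
    history = []
    for _ in range(epochs):
        history.append(sum(backprop_step(x, t, w_hidden, w_out, step) for x, t in samples))
    return history


if __name__ == "__main__":
    random.seed(0)
    # Logical OR; the third input is a constant 1.0 that plays the role of a bias.
    samples = [([0.0, 0.0, 1.0], 0.0), ([0.0, 1.0, 1.0], 1.0),
               ([1.0, 0.0, 1.0], 1.0), ([1.0, 1.0, 1.0], 1.0)]
    w_hidden = [[random.uniform(-1, 1) for _ in range(3)] for _ in range(3)]
    w_out = [random.uniform(-1, 1) for _ in range(3)]
    history = train(samples, w_hidden, w_out)
    print(history[0], history[-1])  # total error on the first and on the last pass
```

Note that the error is first computed at the output and only then handed to the hidden layer, which is exactly the movement from the output of the network towards its input.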

Passing through the neural network in this way with each example of the training sample and “tweaking” the weights of the neurons in the right direction, we should eventually get a trained neural network. Backpropagation is a simple modification of gradient descent for multilayer neural networks, and therefore it should work for neural networks of any complexity. We say “should” here because in practice there are cases when gradient descent fails and does not let you fit a good regression or train a neural network. It is useful to know what causes such difficulties.

Gradient Descent Difficulties

Stopping at the wrong minimum. The gradient descent method helps to find a local extremum, but we cannot always use it to reach the global minimum or maximum of the function. This happens because, moving along the antigradient, the algorithm stops its work as soon as it reaches the first local minimum it encounters.

Imagine that you are standing on top of a mountain. If you want to descend to the lowest point in the area, the gradient descent method will not always help you, because the first low point on your way will not necessarily be the lowest one. In life you are able to see that it is worth climbing a little in order to go even lower afterwards, but the algorithm will simply stop in such a situation. Often this can be avoided by choosing the right step.
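A small experiment shows how this happens. The landscape below is a made-up one-dimensional function with two valleys of different depth; the function itself, the step of 0.01 and the name `descend` are assumptions chosen only for illustration.

```python
def f(x):
    """A made-up landscape with two valleys of different depth."""
    return x ** 4 - 3 * x ** 2 + x


def f_prime(x):
    """Slope of the landscape at point x."""
    return 4 * x ** 3 - 6 * x + 1


def descend(x, step=0.01, iterations=1000):
    """Plain gradient descent: keep stepping against the slope."""
    for _ in range(iterations):
        x -= step * f_prime(x)
    return x


if __name__ == "__main__":
    for start in (2.0, -2.0):
        end = descend(start)
        print(f"start {start:+.1f}: stops near x = {end:.2f}, height {f(end):.2f}")
```

Starting from x = 2, the descent stops in the shallow valley near x = 1.13, while starting from x = -2 it reaches the deeper valley near x = -1.30. The algorithm is the same in both cases; only the starting point differs.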

Wrong step choice. Gradient descent is an iterative method, which means that we ourselves need to choose the step size, that is, the speed with which we descend. By choosing too large a step, we can fly past the extremum we need and never find the minimum. This is especially likely on a very steep descent: if we again imagine that we are at the top of a steep mountain, the slope near the minimum may be so sharp that we simply fly over it. Choosing too small a step, on the other hand, makes the algorithm extremely slow when we find ourselves on a relatively flat surface.
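The effect of the step is easy to see on the simplest possible landscape, the parabola f(x) = x², whose minimum is at zero. The three step values below and the starting point of 10 are arbitrary choices made for this sketch.

```python
def descend(step, x=10.0, iterations=100):
    """Gradient descent on f(x) = x**2, whose slope at point x is 2*x."""
    for _ in range(iterations):
        x -= step * 2 * x
    return x


if __name__ == "__main__":
    for step in (0.001, 0.1, 1.1):
        print(f"step {step}: x after 100 iterations = {descend(step):.3g}")
```

With a step of 0.1 the point ends up practically at the minimum. With a step of 0.001 it has covered less than a fifth of the way after 100 iterations. With a step of 1.1 every move jumps over the minimum and lands farther from it than before, so the point flies away instead of descending.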

Network paralysis. Sometimes the gradient descent method fails to find a minimum at all. This can happen if there are flat areas on both sides of the minimum: having hit a flat area, the algorithm reduces the step and eventually stops. If, standing on top of a mountain, you decide to walk to your house in the lowlands, the path may take too long if you accidentally wander onto a very flat area. Or, if the flat areas are bordered by almost sheer “slopes”, the algorithm, choosing a very large step, will jump from one slope to the other, hardly moving towards the minimum at all.
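Here is a sketch of how a flat area stalls the descent. The landscape f(x) = -exp(-x²), a narrow pit at zero surrounded by an almost level plain, is our own made-up example, and so are the two starting points and the step of 0.1.

```python
import math


def slope(x):
    """Slope of f(x) = -exp(-x**2): a narrow pit at 0 surrounded by a plain."""
    return 2 * x * math.exp(-x ** 2)


def descend(x, step=0.1, iterations=1000):
    """Plain gradient descent: the move is proportional to the slope."""
    for _ in range(iterations):
        x -= step * slope(x)
    return x


if __name__ == "__main__":
    print(descend(1.0))  # starts on the side of the pit
    print(descend(4.0))  # starts out on the plain
```

From x = 1 the descent reaches the bottom of the pit, but from x = 4 the slope is so close to zero that after a thousand iterations the point has barely moved.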

All these difficulties must be taken into account when designing a machine learning system. For example, it is always useful to track exactly how the error function changes over time: whether it falls with each new cycle or stalls, and how the nature of this fall changes with the step size. To avoid ending up in a bad local minimum, it can be useful to start from several randomly chosen points in the landscape, so that the probability of getting stuck is much lower. There are many more big and small secrets of dealing with gradient descent, and there are more exotic ways of learning that bear little resemblance to it. This, however, is a topic for another conversation and a separate article within the framework of the AI ABC project.
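Both pieces of advice, watching the error and starting from several random points, fit into a short sketch. The landscape is the same kind of made-up function with two valleys as one might draw on a blackboard; the names `descend` and `best_of`, the ten restarts and the interval from -2 to 2 are assumptions made for illustration.

```python
import random


def f(x):
    """A made-up landscape with a shallow valley and a deep one."""
    return x ** 4 - 3 * x ** 2 + x


def f_prime(x):
    """Slope of the landscape at point x."""
    return 4 * x ** 3 - 6 * x + 1


def descend(x, step=0.01, iterations=1000):
    """Return the final point and the error recorded before every step."""
    history = []
    for _ in range(iterations):
        history.append(f(x))
        x -= step * f_prime(x)
    return x, history


def best_of(starts):
    """Run the descent from every starting point and keep the lowest result."""
    return min((descend(start)[0] for start in starts), key=f)


if __name__ == "__main__":
    random.seed(0)
    starts = [random.uniform(-2.0, 2.0) for _ in range(10)]
    best = best_of(starts)
    print(best, f(best))
```

The `history` list is what one would plot to see whether the error keeps falling or has stalled, and `best_of` finds the deeper valley as soon as at least one of the random starting points lands on its side of the ridge.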

Prepared by Alexander Ershov